Indexing with Solr Cell and Apache Tika

If the documents you need to index are in a binary format, such as Word, Excel, PDFs, etc., Solr includes a request handler which uses Apache Tika to extract text for indexing to Solr.

Apache Tika incorporates many different file-format parsers such as Apache PDFBox and Apache POI to extract the text content and metadata from files.

Solr’s ExtractingRequestHandler uses Apache Tika via an external Tika Server to extract text and metadata from binary files.

When this framework was under development, it was called the Solr Content Extraction Library, or CEL; from that abbreviation came this framework’s name: Solr Cell.

The names Solr Cell and ExtractingRequestHandler are used

interchangeably for this feature.

Key Solr Cell Concepts

When using the Solr Cell framework, it is helpful to keep the following in mind:

-

Tika will automatically attempt to determine the input document type (e.g., Word, PDF, HTML) and extract the content appropriately. If you like, you can explicitly specify a MIME type for Tika with the

stream.typeparameter. See http://tika.apache.org/1.28.5/formats.html for the file types supported. -

Briefly, Tika internally works by synthesizing an XHTML document from the core content of the parsed document, which is passed to a configured SAX ContentHandler provided by Solr Cell. Solr responds to SAX events to create one or more text fields from the content. Tika exposes document metadata as well (apart from the XHTML).

-

Tika produces metadata such as

dc:title,dc:subject, anddc:authoraccording to specifications such as the DublinCore. The metadata available is highly dependent on the file types and what they, in turn, contain. Some of the general metadata created is described in the section Metadata Created by Tika below. Solr Cell supplies some metadata of its own too. -

Solr Cell concatenates text from the internal XHTML into a

contentfield. You can configure which elements should be included/ignored, and which should map to another field. -

Solr Cell maps each piece of metadata onto a field. By default, it maps to the same name, but several parameters control how this is done.

-

When Solr Cell finishes creating the internal

SolrInputDocument, the rest of the indexing stack takes over. The next step after any update handler is the Update Request Processor chain.

Module

This is provided via the extraction Solr Module that needs to be enabled before use.

The "techproducts" example included with Solr is pre-configured to have Solr Cell configured. If you are not using the example, you will want to pay attention to the section solrconfig.xml Configuration below.

Extraction Backends

The ExtractingRequestHandler supports multiple backends, selectable with the extraction.backend parameter. The only backend currently supported is the tikaserver backend, which uses an external Tika server process to do the extraction.

Tika Server

Solr delegates content extraction to an external Apache Tika Server process. This provides operational isolation (crashes or heavy parsing won’t impact Solr), simplifies dependency management, and allows you to scale Tika independently of Solr.

Example handler configuration:

<requestHandler name="/update/extract" class="solr.extraction.ExtractingRequestHandler">

<!-- Point Solr to your Tika Server (required) -->

<str name="tikaserver.url">http://localhost:9998</str>

</requestHandler>Starting Tika Server with Docker

The quickest way to run Tika Server for development is using Docker. The examples below expose Tika on port 9998 on localhost for convenience, matching the handler configuration above.

docker run --rm -p 9998:9998 --name tika -d apache/tika:3.2.3.0-full

If Solr runs in Docker too, ensure both containers share a network and use the Tika container name as the host in tikaserver.url.

|

Trying out Solr Cell

You can try out the Tika framework using the schemaless example included in Solr.

First we start a tika server on port 9998, using Docker.

# Start Tika Server in the background

docker run --rm -p 9998:9998 --name tika -d apache/tika:3.2.3.0-full

# To stop the server when done, run `docker stop tika`The commands below will start Solr, create a core/collection named gettingstarted with the _default configset, and enable the extraction module. Then the /update/extract handler is added to the gettingstarted core/collection to enable Solr Cell.

bin/solr start -e schemaless -Dsolr.modules=extraction

# Configure the handler

curl -X POST -H 'Content-type:application/json' -d '{

"add-requesthandler": {

"name": "/update/extract",

"class": "solr.extraction.ExtractingRequestHandler",

"tikaserver.url": "http://localhost:9998",

"defaults":{

"lowernames": "true",

"captureAttr":"true"

}

}

}' 'http://localhost:8983/solr/gettingstarted/config'Once Solr is started, you can use curl to send a sample PDF included with Solr via HTTP POST:

curl 'http://localhost:8983/solr/gettingstarted/update/extract?literal.id=doc1&commit=true' -F "myfile=@example/exampledocs/solr-word.pdf"The URL above calls the ExtractingRequestHandler, uploads the file solr-word.pdf, and assigns it the unique ID doc1.

Here’s a closer look at the components of this command:

-

The

literal.id=doc1parameter provides a unique ID for the document being indexed. Without this, the ID would be set to the absolute path to the file.There are alternatives to this, such as mapping a metadata field to the ID, generating a new UUID, or generating an ID from a signature (hash) of the content.

-

The

commit=true parametercauses Solr to perform a commit after indexing the document, making it immediately searchable. For optimum performance when loading many documents, don’t call the commit command until you are done. -

The

-Fflag instructs curl to POST data using the Content-Typemultipart/form-dataand supports the uploading of binary files. The@symbol instructs curl to upload the attached file. -

The argument

myfile=@example/exampledocs/solr-word.pdfuploads the sample file. Note this includes the path, so if you upload a different file, always be sure to include either the relative or absolute path to the file.

You can also use bin/solr post to do the same thing:

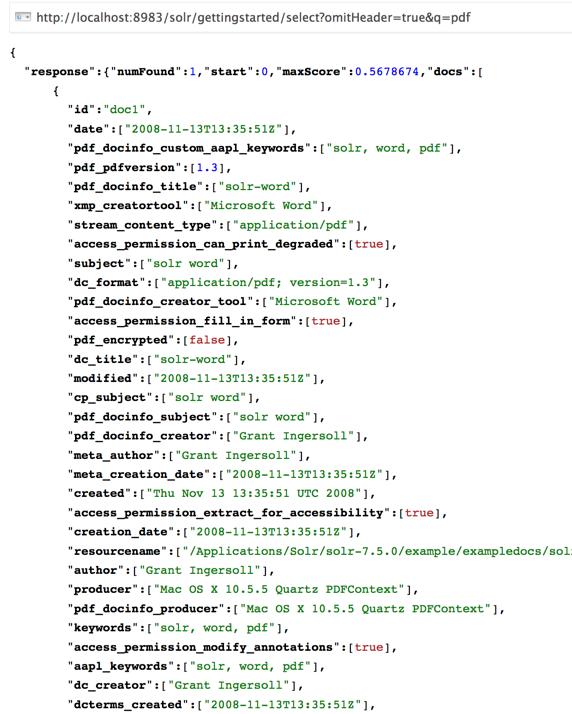

$ bin/solr post -c gettingstarted example/exampledocs/solr-word.pdf --params "literal.id=doc1"Now you can execute a query and find that document with a request like http://localhost:8983/solr/gettingstarted/select?q=pdf.

The document will look something like this:

You may notice there are many metadata fields associated with this document. Solr’s configuration is by default in "schemaless" (data driven) mode, and thus all metadata fields extracted get their own field.

You might instead want to ignore them generally except for a few you specify.

To do that, use the uprefix parameter to map unknown (to the schema) metadata field names to a schema field name that is effectively ignored.

The dynamic field ignored_* is good for this purpose.

For the fields you do want to map, explicitly set them using fmap.IN=OUT and/or ensure the field is defined in the schema.

Here’s an example:

$ bin/solr post -c gettingstarted example/exampledocs/solr-word.pdf --params "literal.id=doc1&uprefix=ignored_&fmap.last_modified=last_modified_dt"|

The above example won’t work as expected if you run it after you’ve already indexed the document one or more times. Previously we added the document without these parameters, so all fields were added to the index at that time.

The The easiest way to try out the |

ExtractingRequestHandler Parameters and Configuration

Solr Cell Parameters

The following parameters are accepted by the ExtractingRequestHandler.

These parameters can be set for each indexing request (as request parameters), or they can be set for all requests to the request handler by defining them in solrconfig.xml.

capture-

Optional

Default: none

Captures XHTML elements with the specified name for a supplementary addition to the Solr document. This parameter can be useful for copying chunks of the XHTML into a separate field. For instance, it could be used to grab paragraphs (

<p>) and index them into a separate field. Note that content is still also captured into thecontentfield.Example:

capture=p(in a request) or<str name="capture">p</str>(insolrconfig.xml)Output:

"p": {"This is a paragraph from my document."}This parameter can also be used with the

fmap.source_fieldparameter to map content from attributes to a new field. captureAttr-

Optional

Default:

falseIndexes attributes of the Tika XHTML elements into separate fields, named after the element. If set to

true, when extracting from HTML, Tika can return the href attributes in<a>tags as fields named “a”.Example:

captureAttr=trueOutput:

"div": {"classname1", "classname2"} commitWithin-

Optional

Default: none

Issue a commit to the index within the specified number of milliseconds.

Example:

commitWithin=10000(10 seconds) defaultField-

Optional

Default: none

A default field to use if the

uprefixparameter is not specified and a field cannot otherwise be determined.Example:

defaultField=_text_ extraction.backend-

Optional

Default:

tikaserverSpecifies the backend to use for extraction. As of Solr 10, only

tikaserveris supported. This parameter is optional sincetikaserveris the only available backend.Example: In

solrconfig.xml:<str name="extraction.backend">tikaserver</str>. extractOnly-

Optional

Default:

falseIf

true, returns the extracted content from Tika without indexing the document. This returns the extracted XHTML as a string in the response. When viewing on a screen, it may be useful to set theextractFormatparameter for a response format other than XML to aid in viewing the embedded XHTML tags.Example:

extractOnly=true extractFormat-

Optional

Default:

xmlControls the serialization format of the extract content. The options are

xmlortext. Thexmlformat is actually XHTML, the same format that results from passing the-xcommand to the Tika command line application, while the text format is like that produced by Tika’s-tcommand.This parameter is valid only if

extractOnlyis set to true.Example:

extractFormat=textOutput: For an example output (in XML), see https://cwiki.apache.org/confluence/display/solr/TikaExtractOnlyExampleOutput.

fmap.source_field-

Optional

Default: none

Maps (moves) one field name to another. The

source_fieldmust be a field in incoming documents, and the value is the Solr field to map to.Example:

fmap.content=textcauses the data in thecontentfield generated by Tika to be moved to the Solr’stextfield. ignoreTikaException-

Optional

Default: none

If

true, exceptions found during processing will be skipped. In some cases, there may be metadata available that will be indexed.Example:

ignoreTikaException=true literal.fieldname-

Optional

Default: none

Populates a field with the name supplied with the specified value for each document. The data can be multivalued if the field is multivalued.

Example:

literal.doc_status=publishedOutput:

"doc_status": "published" literalsOverride-

Optional

Default:

trueIf

true, literal field values will override other values with the same field name.If

false, literal values defined withliteral.fieldnamewill be appended to data already in the fields extracted from Tika. When settingliteralsOverridetofalse, the field must be multivalued.Example:

literalsOverride=false lowernames-

Optional

Default:

falseIf

true, all field names will be mapped to lowercase with underscores, if needed.Example:

lowernames=trueOutput: Assuming input of "Content-Type", the result in documents would be a field

content_type multipartUploadLimitInKB-

Optional

Default:

2048kilobytesDefines the size in kilobytes of documents to allow. If you have very large documents, you should increase this or they will be rejected.

Example:

multipartUploadLimitInKB=2048000 passwordsFile-

Optional

Default: none

Defines a file path and name for a file of file name to password mappings. See the section Indexing Encrypted Documents for more information about using a password file.

Example:

passwordsFile=/path/to/passwords.txt

tikaserver.maxChars-

Optional

Default: 100 MBytes

Sets a hard limit on the number of bytes Solr will accept from the Tika Server response body when using the

tikaserverbackend. If the extracted content exceeds this limit, the request will fail with HTTP 400 (Bad Request).This parameter can only be configured in the request handler configuration (

solrconfig.xml), not per request.Example: In

solrconfig.xml:<long name="tikaserver.maxChars">1000000</long> tikaserver.recursive-

Optional

Default: false

Controls whether Tika Server should recursively extract text from embedded documents (e.g., attachments in emails, embedded files in archives). Set to

trueto enable recursive extraction. resource.name-

Optional

Default: none

Specifies the name of the file to index. This is optional, but Tika can use it as a hint for detecting a file’s MIME type.

Example:

resource.name=mydoc.doc resource.password-

Optional

Default: none

Defines a password to use for a password-protected PDF or OOXML file. See the section Indexing Encrypted Documents for more information about using this parameter.

Example:

resource.password=secret tikaserver.timeoutSeconds-

Optional

Default:

180secondsSets the HTTP timeout when communicating with Tika Server, in seconds. Can be set per request as a parameter or as a default in the request handler configuration. If set on the request it overrides the handler default for that call only.

Examples:

-

Per request:

tikaserver.timeoutSeconds=60 -

In

solrconfig.xml:<int name="tikaserver.timeoutSeconds">60</int>

-

tikaserver.url-

Required

Default: none

Specifies the URL of the Tika Server instance to use for content extraction. This parameter is required and can only be configured in

solrconfig.xml, not per request.If your TikaServer is using HTTPS, it needs to use a verifiable SSL certificate. If using self-signed or custom Certificate Authority, you will need to add those to Solr’s Truststore. The

tikaserverbackend currently does not have support for configuring custom certificates for Tika alone.Example: In

solrconfig.xml:<str name="tikaserver.url">http://localhost:9998</str>;. uprefix-

Optional

Default: none

Prefixes all fields that are undefined in the schema with the given prefix. This is very useful when combined with dynamic field definitions.

Example:

uprefix=ignored_would addignored_as a prefix to all unknown fields. In this case, you could additionally define a rule in the Schema to not index these fields:<dynamicField name="ignored_*" type="ignored" /> xpath-

Optional

Default: none

When extracting, only return Tika XHTML content that satisfies the given XPath expression. See http://tika.apache.org/1.28.5/ for details on the format of Tika XHTML, it varies with the format being parsed. Also see the section Defining XPath Expressions for an example.

solrconfig.xml Configuration

If you have started Solr with one of the supplied example configsets, you may already have the ExtractingRequestHandler configured by default.

First, the extraction module must be enabled.

This can be done by specifying the environment variable SOLR_MODULES=extraction in your startup configuration.

You can then configure the ExtractingRequestHandler in solrconfig.xml.

The following is the default configuration found in Solr’s sample_techproducts_configs configset, which you can modify as needed:

<requestHandler name="/update/extract"

startup="lazy"

class="solr.extraction.ExtractingRequestHandler" >

<lst name="defaults">

<str name="lowernames">true</str>

<str name="fmap.content">_text_</str>

</lst>

</requestHandler>In this setup, all field names are lower-cased (with the lowernames parameter), and Tika’s content field is mapped to Solr’s text field.

|

You may need to configure Update Request Processors (URPs) that parse numbers and dates and do other manipulations on the metadata fields generated by Solr Cell. In Solr’s If you instead explicitly define the fields for your schema, you can selectively specify the desired URPs.

An easy way to specify this is to configure the parameter The above-suggested list was taken from the list of URPs that run as a part of schemaless mode and provide much of its functionality.

However, one major part of the schemaless functionality is missing from the suggested list, |

Parser-Specific Properties

Parser-specific properties for Tika must be configured directly on your Tika Server instance. Consult the Apache Tika documentation for details.

| In earlier versions of Solr Cell you could supply Tika configuration directly to Solr. This is no longer possible. |

Indexing Encrypted Documents

The ExtractingRequestHandler will decrypt encrypted files and index their content if you supply a password in either resource.password in the request, or in a passwordsFile file.

In the case of passwordsFile, the file supplied must be formatted so there is one line per rule.

Each rule contains a file name regular expression, followed by “=”, then the password in clear-text.

Because the passwords are in clear-text, the file should have strict access restrictions.

# This is a comment

myFileName = myPassword

.*\.docx$ = myWordPassword

.*\.pdf$ = myPdfPasswordExtending the ExtractingRequestHandler

If you want to supply your own ContentHandler for Solr to use, you can extend the ExtractingRequestHandler and override the createFactory() method.

This factory is responsible for constructing the SolrContentHandler that interacts with Tika, and allows literals to override Tika-parsed values.

Set the parameter literalsOverride, which normally defaults to true, to false to append Tika-parsed values to literal values.

Solr Cell Internals

Metadata Created by Tika

As mentioned earlier, Tika produces metadata about the document. Metadata describes different aspects of a document, such as the author’s name, the number of pages, the file size, and so on. The metadata produced depends on the type of document submitted. For instance, PDFs have different metadata than Word documents do.

Metadata Added by Solr

In addition to the metadata added by Tika’s parsers, Solr adds the following metadata:

-

stream_name: The name of the Content Stream as uploaded to Solr. Depending on how the file is uploaded, this may or may not be set. -

stream_source_info: Any source info about the stream. -

stream_size: The size of the stream in bytes. -

stream_content_type: The content type of the stream, if available.

It’s recommended to use the extractOnly option before indexing to discover the values Solr will set for these metadata elements on your content.

|

Order of Input Processing

Here is the order in which the Solr Cell framework processes its input:

-

Tika generates fields or passes them in as literals specified by

literal.<fieldname>=<value>. IfliteralsOverride=false, literals will be appended as multi-value to the Tika-generated field. -

If

lowernames=true, Tika maps fields to lowercase. -

Tika applies the mapping rules specified by

fmap.source=targetparameters. -

If

uprefixis specified, any unknown field names are prefixed with that value, else ifdefaultFieldis specified, any unknown fields are copied to the default field.

Solr Cell Examples

Using capture and Mapping Fields

The command below captures <h1> tags separately (capture=h1), and then maps all the instances of that field to a dynamic field named foo_t (fmap.h1=foo_t).

$ bin/solr post -c gettingstarted example/exampledocs/sample.html --params "literal.id=doc2&captureAttr=true&defaultField=_text_&fmap.h1=foo_t&capture=h1"Using Literals to Define Custom Metadata

To add in your own metadata, pass in the literal parameter along with the file:

$ bin/solr post -c gettingstarted --params "literal.id=doc4&captureAttr=true&defaultField=text&capture=div&fmap.div=foo_t&literal.blah_s=Bah" example/exampledocs/sample.htmlThe parameter literal.blah_s=Bah will insert a field blah_s into every document.

Every instance of the text will be "Bah".

Defining XPath Expressions

The example below passes in an XPath expression to restrict the XHTML returned by Tika:

$ bin/solr post -c gettingstarted --params "literal.id=doc5&captureAttr=true&defaultField=text&capture=h1&fmap.h1=foo_t&xpath=/xhtml:html/xhtml:body/xhtml:h1//node()" example/exampledocs/sample.htmlExtracting Data without Indexing

Solr allows you to extract data without indexing. You might want to do this if you’re using Solr solely as an extraction server or if you’re interested in testing Solr extraction.

The example below sets the extractOnly=true parameter to extract data without indexing it.

curl "http://localhost:8983/solr/gettingstarted/update/extract?&extractOnly=true" --data-binary @example/exampledocs/sample.html -H 'Content-type:text/html'The output includes XML generated by Tika (and further escaped by Solr’s XML) using a different output format to make it more readable (-out yes instructs the tool to echo Solr’s output to the console):

$ bin/solr post -c gettingstarted --params "extractOnly=true&wt=json&indent=true" --verbose example/exampledocs/sample.htmlUsing Solr Cell with a POST Request

The example below streams the file as the body of the POST, which does not, then, provide information to Solr about the name of the file.

curl "http://localhost:8983/solr/gettingstarted/update/extract?literal.id=doc6&defaultField=text&commit=true" --data-binary @example/exampledocs/sample.html -H 'Content-type:text/html'Using Solr Cell with SolrJ

SolrJ is a Java client that you can use to add documents to the index, update the index, or query the index. You’ll find more information on SolrJ in SolrJ.

Here’s an example of using Solr Cell and SolrJ to add documents to a Solr index.

First, let’s use SolrJ to create a new SolrClient, then we’ll construct a request containing a ContentStream (essentially a wrapper around a file) and sent it to Solr:

public class SolrCellRequestDemo {

public static void main (String[] args) throws IOException, SolrServerException {

SolrClient client = new HttpSolrClient.Builder("http://localhost:8983/solr/my_collection").build();

ContentStreamUpdateRequest req = new ContentStreamUpdateRequest("/update/extract");

req.addFile(new File("my-file.pdf"));

req.setParam(ExtractingParams.EXTRACT_ONLY, "true");

NamedList<Object> result = client.request(req);

System.out.println("Result: " + result);

}

}This operation streams the file my-file.pdf into the Solr index for my_collection.

The sample code above calls the extract command, but you can substitute other commands that are supported by Solr Cell.

The key class to use is the ContentStreamUpdateRequest, which makes sure the ContentStreams are set properly.

SolrJ takes care of the rest.

Note that the ContentStreamUpdateRequest is not just specific to Solr Cell.

You can send CSV to the CSV Update handler and to any other Request Handler that works with Content Streams for updates.